Handling Mouse and Touch Input

libavg provides a number of ways to handle mouse and touch input:

- The traditional CURSOR_DOWN, CURSOR_UP, etc. events based on the CursorEvent class that are triggered when a touch or a mouse event happens on a node.
- Contacts that allow apps to react to MOVE or UP events of one individual touch.
- Gesture recognizers that detect certain gestures and trigger events based on the gestures.

In many cases, the Contact class and gesture recognizers make it possible to write much more compact code than the traditional node events do.

In libavg 1.8 or earlier, the environment variable AVG_MULTITOUCH_DRIVER must be set to select an input source. In current libavg, touch is enabled by default; if you have a TUIO event source, set AVG_ENABLE_TUIO. Alternatively, touch input can be configured on the command line or through parameters passed to app.start(). Start any libavg app with --help to get a list of the available command line parameters.

CursorEvents

CursorEvents are triggered whenever a user clicks on or touches a Node (for a discussion of how libavg determines which node should be the recipient of an event, see BubblingAndCapture). The design of the CursorEvent handling system follows established practice; if you've done any JavaScript event handling, or used GTK, MFC or anything similar, you'll find things very familiar. The Events page contains an example of a cursor event handler.

CursorEvent handling routines get passed one parameter, event. It contains information about exactly what happened: among other things, the cursor position, which buttons (if any) are pressed, and which input source (mouse, multitouch surface or tracker) the event is coming from. It also carries a reference to the Contact that caused the event. For more information on the contents of the CursorEvent object, have a look at the reference.

libavg supports listening to up, down and move as well as over and out events for cursor event sources. All of these handlers can be attached to any avg node, including DivNodes.
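To make the handler model concrete without requiring libavg, here is a toy, pure-Python sketch of per-node handler registration filtered by event type and input source. All names (`SketchNode`, `connectHandler`, the `EventType` and `Source` enums) are hypothetical; they only mimic the shape of calls like `connectEventHandler(type, source, ...)` and are not libavg's API.

```python
from dataclasses import dataclass
from enum import Enum, IntFlag


class EventType(Enum):
    # Hypothetical event types mirroring down/up/move/over/out.
    CURSOR_DOWN = "down"
    CURSOR_UP = "up"
    CURSOR_MOTION = "motion"
    CURSOR_OVER = "over"
    CURSOR_OUT = "out"


class Source(IntFlag):
    # Hypothetical input sources, combinable as a bitmask.
    MOUSE = 1
    TOUCH = 2
    TRACK = 4


@dataclass
class CursorEvent:
    type: EventType
    source: Source
    pos: tuple      # (x, y) cursor position
    cursorid: int   # identifies the mouse or the individual touch


class SketchNode:
    """Toy node: stores handlers and calls the ones matching an event."""

    def __init__(self):
        self._handlers = []  # (event type, source mask, callback)

    def connectHandler(self, evtype, sources, callback):
        self._handlers.append((evtype, sources, callback))

    def dispatch(self, event):
        for evtype, sources, callback in self._handlers:
            # Call only if the type matches and the source is in the mask.
            if evtype == event.type and event.source & sources:
                callback(event)


log = []
div = SketchNode()
div.connectHandler(EventType.CURSOR_DOWN, Source.MOUSE | Source.TOUCH,
                   lambda e: log.append((e.cursorid, e.pos)))
div.dispatch(CursorEvent(EventType.CURSOR_DOWN, Source.TOUCH, (10, 20), 1))
div.dispatch(CursorEvent(EventType.CURSOR_UP, Source.TOUCH, (10, 20), 1))  # wrong type
div.dispatch(CursorEvent(EventType.CURSOR_DOWN, Source.TRACK, (5, 5), 2))  # source not in mask
```

Only the first event reaches the handler: the second has the wrong type and the third comes from a source outside the mask.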

Contacts

In mouse or single-touch environments, there is one mouse cursor. This cursor exists during the lifetime of the application. When using a multitouch device, touches are transient: they appear and disappear many times during an application's lifetime. libavg provides a Contact class that allows easy handling of transient touches. Contacts are objects that correspond to one touch and exist from the down to the up event.

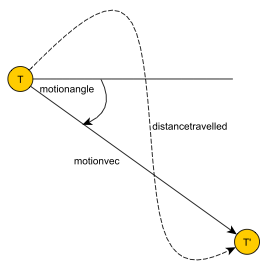

Every cursor event contains a reference to the corresponding contact, and it is possible to register per-contact callbacks. These are invoked whenever a specific contact moves or disappears. In addition, frequently used per-contact data is precalculated. Contacts can be queried for their vector, distance and angle from the start position as well as age and total distance travelled. Additionally, all previous events that this contact generated are available. (Contact image by Christian Jäckel.)
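The per-contact quantities listed above can be sketched in plain Python. `ContactSketch`, `TouchSample` and their attribute names are hypothetical, chosen for this illustration; libavg's own Contact class computes similar values, but its attribute names and units (for instance, how age is measured) may differ, so check the reference.

```python
import math
from dataclasses import dataclass


@dataclass
class TouchSample:
    pos: tuple     # (x, y) in pixels
    time: float    # seconds (assumed unit for this sketch)


class ContactSketch:
    """Hypothetical per-touch record that lives from the down to the up event."""

    def __init__(self, downSample):
        self.events = [downSample]    # every sample this touch produced
        self.distanceTravelled = 0.0  # path length, updated incrementally

    def addSample(self, sample):
        self.distanceTravelled += math.dist(self.events[-1].pos, sample.pos)
        self.events.append(sample)

    @property
    def motionVec(self):
        # Vector from the start position to the current position.
        (x0, y0), (x1, y1) = self.events[0].pos, self.events[-1].pos
        return (x1 - x0, y1 - y0)

    @property
    def distanceFromStart(self):
        return math.hypot(*self.motionVec)

    @property
    def motionAngle(self):
        # Angle of the motion vector in radians.
        dx, dy = self.motionVec
        return math.atan2(dy, dx)

    @property
    def age(self):
        return self.events[-1].time - self.events[0].time


contact = ContactSketch(TouchSample((0, 0), 0.0))
contact.addSample(TouchSample((3, 0), 0.1))
contact.addSample(TouchSample((3, 4), 0.25))
print(contact.motionVec, contact.distanceFromStart, contact.distanceTravelled)
# prints: (3, 4) 5.0 7.0
```

The distance from the start (5) and the total distance travelled (3 + 4 = 7) differ because the touch took an L-shaped path.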

The following code illustrates the concept. It draws a circle around every touch point:

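```python
from libavg import avg

# rootNode is assumed to be the scene's root node, set up elsewhere in the app.

class TouchVisualization(avg.CircleNode):
    """Draws a circle that follows one touch until it is lifted."""

    def __init__(self, event):
        avg.CircleNode.__init__(self,
                pos=event.pos, r=10, parent=rootNode)
        # Per-contact callbacks: only motion and up events of this touch.
        event.contact.connectListener(self._onMotion,
                self._onUp)

    def _onMotion(self, event):
        # Follow the touch.
        self.pos = event.pos

    def _onUp(self, event):
        # Touch lifted: remove the circle from the scene.
        self.unlink(True)

def onTouchDown(event):
    # Every new touch gets its own visualization object.
    TouchVisualization(event)

rootNode.connectEventHandler(avg.CURSORDOWN, avg.TOUCH,
        None, onTouchDown)
```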

To implement this using standard event handlers, the application would need to manage a map of TouchID->TouchVisualization objects and route motion events to the correct visualization object based on the map. Contrast this with the extremely simple code above.
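For comparison, here is a minimal sketch of the bookkeeping the standard-handler approach needs. It is plain Python with hypothetical names (`Visualization`, `onDown`, `onMotion`, `onUp`) standing in for real libavg nodes and handlers; the point is that the application itself owns the map from cursor ID to visualization and must route every event through it.

```python
class Visualization:
    """Hypothetical placeholder for a per-touch circle node."""

    def __init__(self, pos):
        self.pos = pos
        self.alive = True

    def unlink(self):
        self.alive = False


visualizations = {}  # cursor/touch ID -> Visualization


def onDown(cursorid, pos):
    visualizations[cursorid] = Visualization(pos)


def onMotion(cursorid, pos):
    vis = visualizations.get(cursorid)
    if vis is not None:  # ignore touches we never saw go down
        vis.pos = pos


def onUp(cursorid, pos):
    vis = visualizations.pop(cursorid, None)  # forget the touch
    if vis is not None:
        vis.unlink()


onDown(1, (10, 10))
onDown(2, (50, 50))
onMotion(1, (12, 11))
onUp(2, (50, 50))
```

Every handler has to look up the right object by ID and the up handler has to clean the map, which is exactly the work that per-contact callbacks take over.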

Contacts form the basis of the other two libavg features geared towards natural user interfaces: Gesture recognizers and touch visualization.

Gesture Recognizers

libavg contains built-in recognizers for most gestures common in today's touch interfaces. Taps, doubletaps, drags, holds, pinch-to-zoom transforms and their variations have corresponding recognizers that can be attached to nodes. The gesture recognizers are divided into two types: Continuous and non-continuous. Non-continuous recognizers like the TapRecognizer simply deliver an event when the gesture is recognized. Continuous recognizers like the DragRecognizer start continuously delivering events as soon as the gesture is recognized.
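The difference between non-continuous and continuous recognizers can be illustrated with a simplified, pure-Python model that replays the samples of a single touch. The thresholds and the function names `recognizeTap` and `recognizeDrag` are assumptions made for this illustration; libavg's recognizers have their own thresholds and deliver additional callbacks.

```python
import math

MAX_TAP_TIME = 0.3     # seconds; assumed threshold
MAX_TAP_DIST = 10.0    # pixels; assumed threshold
DRAG_THRESHOLD = 10.0  # pixels; assumed threshold


def recognizeTap(samples):
    """Non-continuous: reports 'detected' once, at the up event, or 'failed'."""
    _, p0, t0 = samples[0]
    for kind, pos, t in samples[1:]:
        if math.dist(p0, pos) > MAX_TAP_DIST or t - t0 > MAX_TAP_TIME:
            return ["failed"]
        if kind == "up":
            return ["detected"]
    return []  # touch still down: undecided


def recognizeDrag(samples):
    """Continuous: 'detected' once motion passes the threshold, then a
    'move' per sample and 'end' on the up event."""
    _, p0, _ = samples[0]
    out = []
    detected = False
    for kind, pos, _ in samples[1:]:
        if not detected and math.dist(p0, pos) > DRAG_THRESHOLD:
            detected = True
            out.append("detected")
        if detected:
            out.append("end" if kind == "up" else "move")
    return out


# Each sample: (kind, (x, y), time in seconds)
tap = [("down", (0, 0), 0.0), ("up", (2, 1), 0.1)]
drag = [("down", (0, 0), 0.0), ("motion", (5, 0), 0.05),
        ("motion", (20, 0), 0.1), ("motion", (40, 0), 0.15),
        ("up", (40, 0), 0.2)]
print(recognizeTap(tap), recognizeDrag(drag))
```

The tap recognizer produces a single result per touch, while the drag recognizer keeps producing events from the moment of detection until the touch ends.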

Here is an example of the simplest type of recognizer. The TapNode changes its color when a tap is recognized:

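```python
from libavg import ui

# TextRect (a node with `rect` and `words` children) is a helper assumed to
# be defined as in src/samples/gestures.py; it is not part of libavg itself.

class TapNode(TextRect):
    def __init__(self, text, isDoubleTap, **kwargs):
        super(TapNode, self).__init__(text, **kwargs)

        # Pick the recognizer that matches the requested gesture.
        if isDoubleTap:
            recognizerClass = ui.DoubletapRecognizer
        else:
            recognizerClass = ui.TapRecognizer
        self.recognizer = recognizerClass(node=self,
                detectedHandler=self.__onDetected)

    def __onDetected(self, event):
        # Called once when the gesture has been recognized.
        self.rect.fillcolor = "000000"
        self.words.color = "FFFFFF"
        self.rect.color = "00FF00"
```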

This is how a standard NUI picture drag/rotate/zoom with inertia is coded in libavg:

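```python
from libavg import ui

# TextRect and moveNodeToTop() are helpers assumed to be defined as in
# src/samples/gestures.py; they are not part of libavg itself.

class TransformNode(TextRect):
    def __init__(self, text, **kwargs):
        super(TransformNode, self).__init__(text, **kwargs)

        self.recognizer = ui.TransformRecognizer(
                eventNode=self,
                detectedHandler=self.__onDetected,
                moveHandler=self.__onMove,
                friction=0.02)    # friction for inertia after release

    def __onDetected(self, event):
        # Bring the touched node to the front when the gesture starts.
        moveNodeToTop(self)

    def __onMove(self, transform):
        # Apply the transform delivered by the recognizer to this node.
        transform.moveNode(self)
```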

Gesture recognizers are quite powerful: They deliver several additional callbacks and they can be tailored to only respond to specific sub-types of gestures (like horizontal or vertical drags). Using the initialEvent attribute, recognizers can also be chained - for instance, to use a hold to enable a drag. The DragRecognizer and TransformRecognizer classes support inertia - simple physics that keep objects moving after they are released. It is easy to constrain the movements the TransformRecognizer allows to certain sizes or angles.
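Inertia can be pictured as a loop that keeps moving a released object while friction drains its speed. The following is one plausible model, not libavg's implementation: `simulateInertia` is a hypothetical function, and friction is assumed here to be the speed lost per frame, which need not match the units of the `friction` parameter in libavg's recognizers.

```python
import math


def simulateInertia(pos, velocity, friction, maxFrames=10_000):
    """Return the positions a released object passes through, one per frame.

    velocity is in pixels per frame; friction is the speed lost per frame
    (an assumed model for this sketch).
    """
    x, y = pos
    vx, vy = velocity
    path = []
    for _ in range(maxFrames):
        speed = math.hypot(vx, vy)
        if speed <= friction:
            break  # friction would stop (or reverse) the motion: done
        # Keep the direction, reduce the speed by the friction amount.
        ux, uy = vx / speed, vy / speed
        speed -= friction
        vx, vy = ux * speed, uy * speed
        x, y = x + vx, y + vy
        path.append((x, y))
    return path


print(simulateInertia((0.0, 0.0), (4.0, 0.0), friction=1.0))
# prints: [(3.0, 0.0), (5.0, 0.0), (6.0, 0.0)]
```

A larger friction value stops the object sooner; a friction of zero would let it glide until `maxFrames` is reached.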

It is also possible to attach several gesture recognizers to a single node. In this case, the recognizers do not know about each other. For instance, if you have a TapRecognizer and a DoubletapRecognizer attached to one node, the TapRecognizer will be triggered in case of a doubletap as well.
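The following toy model shows why. `TapSketch` and `DoubletapSketch` are hypothetical classes, not libavg's recognizers, and the 0.3 s doubletap window is an assumed value. Each recognizer sees the same taps independently, so a quick double tap yields two tap detections and one doubletap detection.

```python
DOUBLETAP_MAX_GAP = 0.3  # seconds between taps; assumed value


class TapSketch:
    def __init__(self):
        self.detections = []

    def onTap(self, time):
        # Every tap is reported immediately.
        self.detections.append(time)


class DoubletapSketch:
    def __init__(self):
        self.detections = []
        self._lastTap = None

    def onTap(self, time):
        if self._lastTap is not None and time - self._lastTap <= DOUBLETAP_MAX_GAP:
            self.detections.append(time)
            self._lastTap = None  # start over after a doubletap
        else:
            self._lastTap = time


tap = TapSketch()
doubletap = DoubletapSketch()
for tapTime in (0.0, 0.2):      # two quick taps = one doubletap
    for r in (tap, doubletap):  # neither recognizer knows about the other
        r.onTap(tapTime)
print(tap.detections, doubletap.detections)
# prints: [0.0, 0.2] [0.2]
```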

The examples are adapted from src/samples/gestures.py, which contains examples for all the features mentioned above. The reference for the recognizer classes is at http://www.libavg.de/reference/svn/ui.html.

Touch Visualization

Note:

Added post-1.8

There is a fundamental difference between the way mouse and multitouch input are implemented on most of today's systems. When the user moves a mouse, he gets immediate feedback because the mouse pointer moves on the screen. This tells him that the device is actually functioning and interpreting his actions correctly. Touch devices generally lack this feedback, so the user has no way of knowing whether the input device is functioning correctly. As a remedy, libavg supports a simple touch visualization that can be turned on like this:

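```python
from libavg import app

class MyMainDiv(app.MainDiv):
    def onInit(self):
        # Show visual feedback under every active touch.
        self.toggleTouchVisualization()
        # [...] rest of the initialization
```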